Requirements:

-Google Webmaster Tools

-Google Analytics

Recently, I noticed a lot of spammy bot traffic to my blog. It has skewed my data, and I suspect these bots could also be hurting my SEO rankings. As a result, I decided to take action to keep my data and SEO health in good shape from now on. There are many routes you can take to keep bot traffic out of your data and away from your rankings. In this blog post I will discuss the route that I took, which has already produced great results. To combat my issues, I used two tools: the Disavow Tool in Google Webmaster Tools and the Bot and Spider Exclusion feature in Google Analytics.

The Disavow Tool in Webmaster Tools is a feature that is used to tell Google not to consider links from certain domains or pages when ranking your website and its internal pages. It is a simple tool that can be extremely useful when you observe sudden drops in your search rankings that coincide with spammy bot activity.

The Bot and Spider Exclusion feature in Google Analytics is fairly new. It excludes from your reports any hits to your website's pages that come from sources on a massive list of red-flagged spam and web-scraping bots.

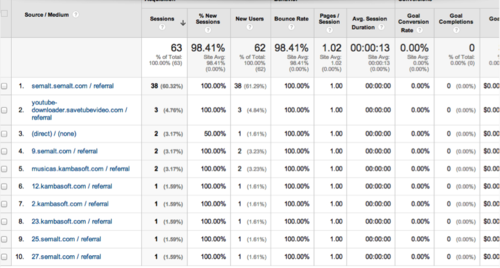

In my case, I noticed a lot of traffic from semalt.com, savetubevideo.com and kambasoft.com. After conducting some research, I learned that these are web-scraping websites. This is traffic that I did not want counted against my website rankings or reported in Google Analytics. Below is what my traffic looked like.

Here are the actions that I took to ensure that my website and data would become healthy again:

Disavow Tool

1) Go to www.google.com/webmasters/tools/disavow-links-main

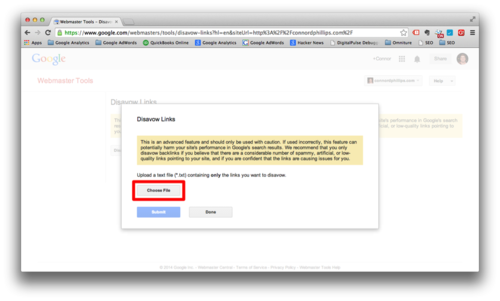

2) Click on the “Disavow Links” button. This will bring up an AJAX window that notifies the user that this is an advanced feature and that they should proceed with caution. After you read through the warning, click “Choose File”.

3) Create a .txt file with the domains/pages that you would not like to be counted towards your search engine rankings. The screenshot below shows what my file looked like. Note that the “#” is used for file commenting. This is not looked at by Google’s bots and is used primarily to provide information about certain parts of the file.

-In order to disavow individual pages, you simply write in the full URL of the page you want to disavow, e.g. "http://spamsite.com/spam-page"

-In order to disavow whole domains, you need to write “domain:” followed by the domain name, without the "http://" prefix, e.g. "domain:spamsite.com"

4) Now that you have your file ready, it is time to upload the file. Select the file from your computer and click “Submit”.

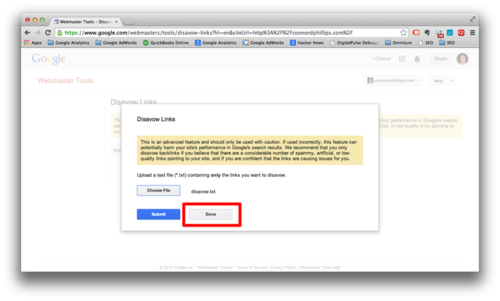

5) Now that the file has been uploaded, click “Done” and you are finished using the disavow tool. Google will no longer take links from these domains and pages into account when ranking your website and its pages.
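If you have more than a handful of entries, the file from step 3 can be generated with a short script instead of typed by hand. The following is only a minimal sketch in Python: the function name `build_disavow_file` and the output file name `disavow.txt` are my own choices for illustration, not anything Google provides. It assumes whole-domain entries are written as `domain:` plus the bare host name, pages as full URLs, and comments as lines starting with `#`.

```python
from urllib.parse import urlparse


def build_disavow_file(domains, pages, comment=None):
    """Return the text of a disavow file (hypothetical helper).

    domains: host names or URLs whose links should all be disavowed
    pages:   full URLs of individual pages to disavow
    comment: optional note, written as a "#" comment on the first line
    """
    lines = []
    if comment:
        lines.append(f"# {comment}")
    for entry in domains:
        # Accept "spamsite.com" or "http://spamsite.com/" and keep only the host.
        host = urlparse(entry if "://" in entry else f"//{entry}").hostname
        if not host:
            raise ValueError(f"cannot extract a host name from {entry!r}")
        lines.append(f"domain:{host}")
    # Individual pages are listed as plain URLs, one per line.
    lines.extend(pages)
    return "\n".join(lines) + "\n"


def main():
    text = build_disavow_file(
        domains=["semalt.com", "http://savetubevideo.com", "kambasoft.com"],
        pages=["http://spamsite.com/spam-page"],
        comment="Spam referrers found in Google Analytics",
    )
    # Write a plain-text file that can be uploaded in step 4.
    with open("disavow.txt", "w", encoding="utf-8") as f:
        f.write(text)


if __name__ == "__main__":
    main()
```

Running the script writes one entry per line, with the three domains as `domain:` lines and the single page as a full URL. Review the file before uploading it, since a disavowed domain loses all of its links to your site.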

Bot and Spider Exclusion feature

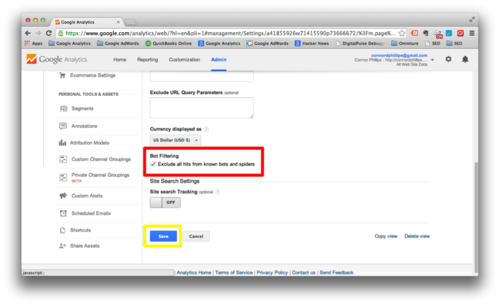

1) If you would like to make sure that Google Analytics is also doing its best to exclude data from these types of sites, then go into your account and click on “Admin” in the navigation section and then click “View Settings” under the “View” column.

2) In “View Settings”, scroll down to the bottom of the page, make sure that “Exclude all hits from known bots and spiders” is checked, and click “Save”.

Using both of these tools should leave you without any major worries about having your SEO score and data corrupted by bots and spiders. They aren’t foolproof, but they are quite possibly the most effective tools out there for keeping your site in the best shape possible.